ChatGPT, the large language model that revolutionised the world of artificial intelligence and made it accessible to anyone with a smartphone, is facing growing competition from rival chatbots such as Google’s Gemini and xAI’s Grok. It remains a leader in this space, recently reaching 810 million weekly active users according to media reports, more than double its user base in January 2025, when it was used by more than 350 million people every week.

This rapid growth has made ChatGPT one of the fastest-growing and most popular apps of all time. Beyond answering basic questions, this tool developed by San Francisco-based startup OpenAI can compose entire essays in seconds, engage in human-like conversations, generate images, solve complex math problems, translate texts, and even write code. However, ChatGPT consumes a lot of energy in the process, reportedly at least ten times more than traditional Google searches.

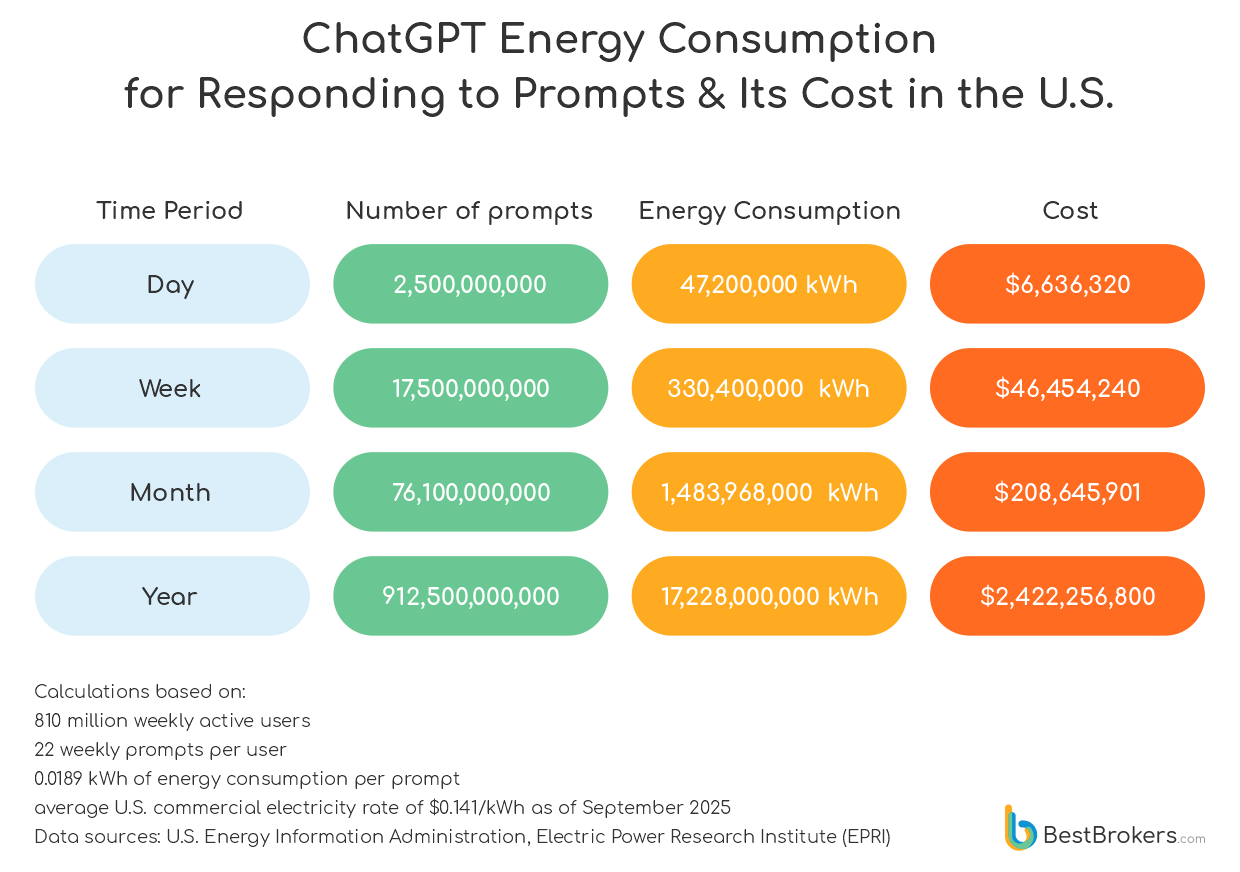

With this in mind, the team at BestBrokers decided to put into perspective the considerable amount of electric power OpenAI’s chatbot uses to respond to prompts on a yearly basis. We also looked at what that would cost at the average U.S. commercial electricity rate of $0.141 per kWh as of September 2025 (the latest rates published by the U.S. Energy Information Administration). After running the numbers, it turned out that for answering questions alone, ChatGPT consumes a whopping 17.23 billion kilowatt-hours on average every year, which amounts to an annual expenditure of $2.42 billion.

I’ll ChatGPT it

When you submit a query to a trained AI model like ChatGPT, the process is known as inference. This involves passing your query through the model’s parameters, which allows the model to spot a pattern and generate a response from the available data. Estimating the energy required for that process is a bit tricky, however, as it depends on many variables, such as query length, number of users, and how efficiently the model runs. In most cases, this information is kept private. Still, we can make some rough calculations to get a sense of the amount of electricity that will be needed.

While electricity consumption varies dramatically between specific models, the current iteration, GPT-5, is significantly more powerful and efficient than previous models, but also more power-hungry. According to researchers at the University of Rhode Island’s AI lab, ChatGPT-5, which launched on August 7, 2025, consumes anywhere between 2 and 45 watt-hours per medium-length prompt, averaging around 18.9 Wh. The research suggests that the new model could be much more electricity-demanding than its predecessor, up to 8 times more, in fact.

This is over sixty times the energy needed for a typical Google search, which consumes about 0.3 watt-hours per query, according to the Electric Power Research Institute (EPRI). People are increasingly using AI chatbots such as ChatGPT to seek information online, with recent findings from a National Bureau of Economic Research (NBER) working paper suggesting this is the most common use for ChatGPT right now. Data shows 19.3% of user conversations with the app can be categorised as ‘getting information’; close to half of all messages (45.2%) fall under the description of ‘use and manipulation’ of information.

On December 4, 2024, OpenAI posted on X that ChatGPT boasted 300 million weekly active users, double the number reported by CEO Sam Altman in September 2023. By September 2025, this number had risen to around 700 million, and by the end of the year, media reports cited a figure of 810 million. Projections for 2026 estimate that weekly active users, both paid subscribers and free users, will reach 1 billion.

While this is certainly a success for the company, these 810 million users are generating around 2.5 billion queries per day. To process these queries and generate answers, the chatbot uses roughly 47.2 gigawatt-hours of energy every day. For context, the average U.S. household uses about 29 kilowatt-hours of electricity per day. This means the power consumed by ChatGPT on an annual basis could easily satisfy the electricity demands of 1.64 million households. Over a full year, ChatGPT consumes roughly as much electricity as a country such as Puerto Rico or Croatia.

Over the course of a year, ChatGPT’s total energy consumption works out to 17,228 GWh, enough to respond to about 912.5 billion prompts. This is on the scale of an entire nation’s electricity use: according to Ember Energy, Puerto Rico consumed roughly 18,720 gigawatt-hours in 2024, only slightly more than ChatGPT, while Slovenia’s electricity demand totalled 14,630 gigawatt-hours, less than what ChatGPT uses to process questions and generate answers.

At the average U.S. commercial electricity rate of 14.06 cents per kWh as of September 2025, this translates to an annual electricity cost of about $2.42 billion, with each query costing approximately $0.0027 to process. Additionally, as OpenAI continues to enhance its chatbots with new features like audio, video, and image generation, these figures could climb even higher.
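For readers who want to check the arithmetic, the following is a minimal Python sketch that reproduces the daily, annual, and cost figures from the inputs quoted above. The variable names are illustrative. Note that the annual total is derived from the rounded daily figure of 47.2 GWh, which appears to be how the article reaches 17,228 GWh; using the unrounded 47.25 GWh would give a slightly higher result.

```python
# Inputs quoted in the article (names are illustrative).
QUERIES_PER_DAY = 2.5e9        # prompts per day
WH_PER_QUERY = 18.9            # average watt-hours per prompt
RATE_USD_PER_KWH = 0.1406      # U.S. commercial rate, September 2025
ARTICLE_DAILY_GWH = 47.2       # daily figure as rounded in the article

# Unrounded daily energy: Wh -> GWh.
daily_gwh = QUERIES_PER_DAY * WH_PER_QUERY / 1e9

# Annual totals, using the rounded daily figure as an assumption.
annual_gwh = ARTICLE_DAILY_GWH * 365
annual_prompts = QUERIES_PER_DAY * 365

# Cost: 1 GWh = 1e6 kWh; 1 Wh = 0.001 kWh.
annual_cost_usd = annual_gwh * 1e6 * RATE_USD_PER_KWH
cost_per_query_usd = WH_PER_QUERY / 1000 * RATE_USD_PER_KWH

print(f"Daily energy:   {daily_gwh:.2f} GWh")
print(f"Annual energy:  {annual_gwh:,.0f} GWh")
print(f"Annual prompts: {annual_prompts / 1e9:.1f} billion")
print(f"Annual cost:    ${annual_cost_usd / 1e9:.2f} billion")
print(f"Cost per query: ${cost_per_query_usd:.4f}")
```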

OpenAI has also developed a series of reasoning AI models, including the current default reasoning model, ChatGPT-5. It is said to be able to handle complex tasks such as writing and reasoning on PhD-level scientific problems, coding, long-form reasoning, and multimodal inputs – all that with much stronger reliability and accuracy compared to previous versions. Such large-language models are designed to spend more time ‘thinking’ before responding, similar to how humans approach complex problems. This enhanced reasoning ability allows them to tackle more difficult tasks, but leads to higher costs and greater energy consumption.

Putting ChatGPT’s massive energy usage for handling user prompts into perspective

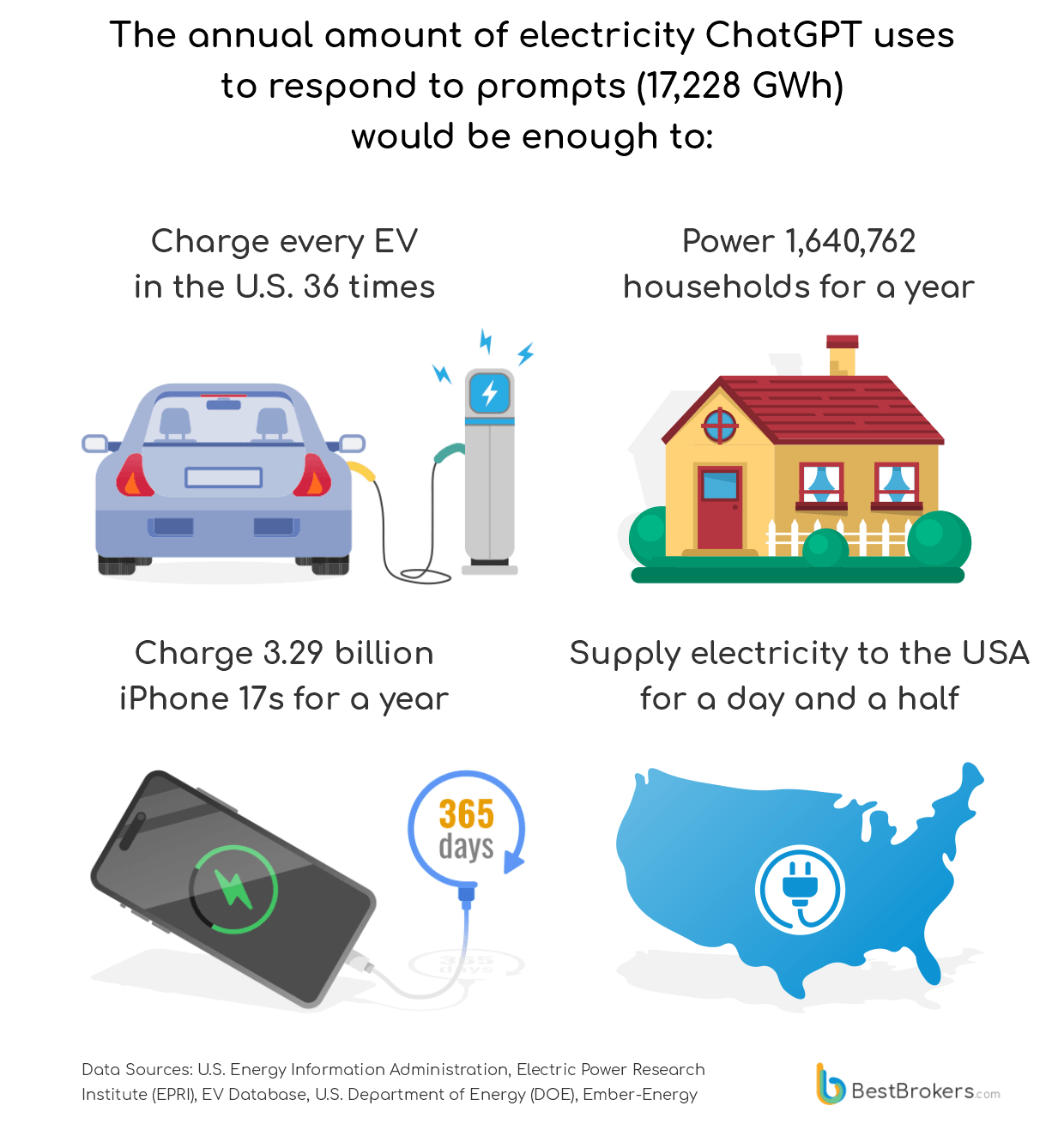

By the end of the second quarter of 2025, the number of electric vehicles on U.S. roads had grown to 6.5 million, according to the Alliance for Automotive Innovation. According to the EV Database, the average electric car consumes 0.19 kilowatt-hours per kilometre and can travel about 384 kilometres on a full charge, which means it has a battery that can store roughly 72.4 kilowatt-hours of energy. Therefore, we can calculate that charging all the electric vehicles in the U.S. once would require 470.6 million kilowatt-hours. If we take the total energy ChatGPT uses in a year to generate responses and divide it by that number, it turns out we could fully charge every electric vehicle in the U.S. about 36 times.
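The electric-vehicle comparison can be sketched the same way. This is an illustrative calculation using the article's own inputs, including the stated 72.4 kWh battery figure; multiplying the rounded 0.19 kWh/km by 384 km gives a value closer to 73 kWh, so the 72.4 presumably reflects unrounded source data.

```python
# Inputs quoted in the article (names are illustrative).
EVS_ON_US_ROADS = 6.5e6        # electric vehicles, Q2 2025
BATTERY_KWH = 72.4             # average usable battery capacity, as stated
ANNUAL_KWH = 17_228e6          # ChatGPT's yearly 17,228 GWh in kWh

# Energy to charge every EV once, then how many times the
# yearly total covers that.
one_full_charge_kwh = EVS_ON_US_ROADS * BATTERY_KWH
full_charges = ANNUAL_KWH / one_full_charge_kwh

print(f"One charge of all EVs: {one_full_charge_kwh / 1e6:.1f} million kWh")
print(f"Full fleet charges per year: {full_charges:.1f}")
```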

Now, let’s look at this from a household perspective. According to the Energy Information Administration (EIA), the average home in the United States consumes about 10,500 kilowatt-hours of electricity per year. That means the energy ChatGPT uses each year to handle requests could power more than 1.64 million U.S. homes for an entire year. While that’s only about 1.25% of the over 132 million households in the country, as per the latest Census data, it’s still a massive amount of energy, especially when you consider that the U.S. ranks third globally in terms of number of households.
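As a quick check of the household comparison, here is a small sketch based on the figures in the paragraph above. The names are illustrative, and the result depends on the rounded inputs.

```python
# Inputs quoted in the article (names are illustrative).
ANNUAL_KWH = 17_228e6          # ChatGPT's yearly 17,228 GWh in kWh
HOME_KWH_PER_YEAR = 10_500     # average U.S. home, per the EIA
US_HOUSEHOLDS = 132e6          # households, per Census data

homes_powered = ANNUAL_KWH / HOME_KWH_PER_YEAR
share_of_households = homes_powered / US_HOUSEHOLDS

print(f"Homes powered for a year: {homes_powered / 1e6:.2f} million")
print(f"Share of U.S. households: {share_of_households:.2%}")
```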

Think about smartphones next. iPhone 17, for example, has a battery capacity of 14.351 watt-hours, which amounts to about 5.24 kilowatt-hours needed to charge it every day for a whole year. If we compare this to ChatGPT’s yearly energy-use figure, we’d find that the electricity needed by the chatbot could fully charge 3.29 billion iPhones every single day, for an entire year. That’s an incredible number of phones kept powered up, simply from the energy ChatGPT uses to generate responses.
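The smartphone figure follows from one full charge per day for a year. A minimal sketch, assuming (as the article does) that each daily charge draws the full battery capacity and ignoring charger losses:

```python
# Inputs quoted in the article (names are illustrative).
IPHONE_BATTERY_WH = 14.351     # iPhone 17 battery capacity
ANNUAL_KWH = 17_228e6          # ChatGPT's yearly 17,228 GWh in kWh

# One full charge per day for 365 days, converted from Wh to kWh.
kwh_per_phone_per_year = IPHONE_BATTERY_WH * 365 / 1000
phones_charged_daily = ANNUAL_KWH / kwh_per_phone_per_year

print(f"Energy per phone per year: {kwh_per_phone_per_year:.2f} kWh")
print(f"Phones charged every day for a year: {phones_charged_daily / 1e9:.2f} billion")
```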

Furthermore, according to the Carbon Trust, which is a UK-based non-profit dedicated to helping businesses cut carbon emissions, one hour of video streaming in Europe consumes about 188 watt-hours of energy. In contrast, ChatGPT spitting out information for just one hour uses approximately 2 billion watt-hours. That means that to match the energy consumption of ChatGPT’s hourly operation, you’d need to stream video for a whopping 10.5 million hours, which is more than a thousand years.
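The streaming comparison can be reproduced roughly as follows. This sketch divides the daily 47.2 GWh evenly across 24 hours, which is a simplifying assumption since real traffic varies through the day.

```python
# Inputs quoted in the article (names are illustrative).
DAILY_GWH = 47.2               # ChatGPT's daily energy use
STREAMING_WH_PER_HOUR = 188    # one hour of video streaming in Europe
HOURS_PER_YEAR = 24 * 365

# Average hourly energy, assuming an even spread over the day (GWh -> Wh).
chatgpt_wh_per_hour = DAILY_GWH * 1e9 / 24
streaming_hours = chatgpt_wh_per_hour / STREAMING_WH_PER_HOUR
streaming_years = streaming_hours / HOURS_PER_YEAR

print(f"ChatGPT per hour: {chatgpt_wh_per_hour / 1e9:.2f} billion Wh")
print(f"Equivalent streaming: {streaming_hours / 1e6:.1f} million hours")
print(f"That is about {streaming_years:,.0f} years of continuous streaming")
```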

And here’s where it gets even more interesting. Using the latest electricity demand data from energy think tank Ember Energy, we identified dozens of nations, including Slovenia, Kenya, Costa Rica, Estonia, and Luxembourg, each of which consumes less electricity annually than the amount ChatGPT uses solely to process user queries. In fact, based on our calculations, ChatGPT’s yearly energy usage to handle prompts could power Brazil, which consumed 760.83 terawatt-hours in 2024, for a little over a week. It is also enough to satisfy the electricity demands of South Korea or Canada for 10 days, or even those of the United States for 34 hours. For China, the world’s largest electricity consumer, it would mean that the power drained by ChatGPT for prompts alone could keep the lights on for nearly 15 hours.
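To illustrate how the country comparisons work, the sketch below converts ChatGPT's yearly total into days of national demand. Only Brazil's 2024 figure is quoted in this article, so it is the only country included; the helper name `days_of_demand` is hypothetical.

```python
CHATGPT_ANNUAL_TWH = 17.228    # 17,228 GWh expressed in TWh


def days_of_demand(country_annual_twh: float) -> float:
    """Days of a country's average demand covered by ChatGPT's yearly use."""
    return CHATGPT_ANNUAL_TWH / country_annual_twh * 365


# Brazil's 2024 electricity demand as quoted in the article.
brazil_days = days_of_demand(760.83)
print(f"Brazil: {brazil_days:.1f} days")
```

The same helper can be applied to any other country once its annual demand in terawatt-hours is known; multiplying the result by 24 gives the figure in hours.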

The Training Costs of ChatGPT

Training large-language models (LLMs) is a highly energy-intensive process as well. During this phase, the AI model ‘learns’ by analysing large amounts of data and examples. This can take anywhere from a few minutes to several months, depending on data volume and model complexity. Throughout the training, the CPUs and GPUs, the electronic chips designed to process large datasets, run nonstop, consuming significant amounts of energy.

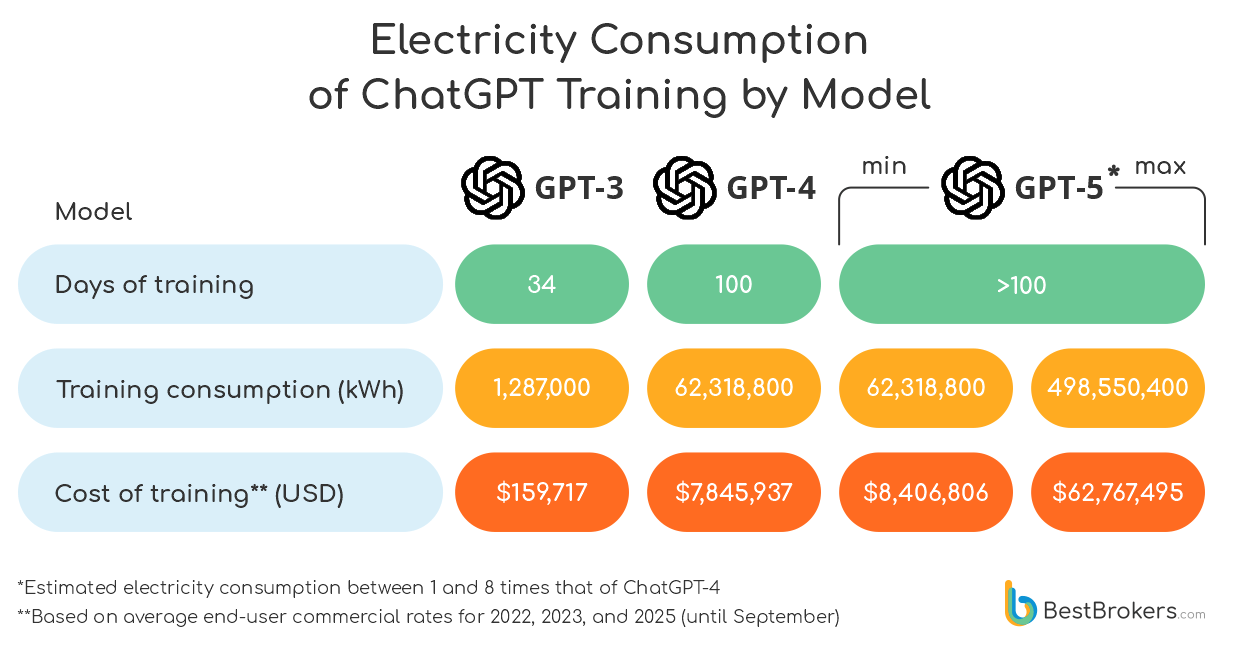

For instance, training OpenAI’s GPT-3, with its 175 billion parameters, took about 34 days and used roughly 1,287,000 kilowatt-hours of electricity. But as models evolve and become more complex, their energy demand increases as well. Training the GPT-4 model, with over 1 trillion parameters, consumed around 62,318,800 kilowatt-hours of electricity over 100 days, 48 times more than GPT-3.
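The jump between the two training runs can be checked with a short sketch. It uses only the figures quoted above and adds an average per-day draw, which is a simple division and not a measured value.

```python
# Training figures quoted in the article (names are illustrative).
GPT3_KWH, GPT3_DAYS = 1_287_000, 34
GPT4_KWH, GPT4_DAYS = 62_318_800, 100

energy_ratio = GPT4_KWH / GPT3_KWH

# Average energy per training day, assuming an even spread over the run.
gpt3_kwh_per_day = GPT3_KWH / GPT3_DAYS
gpt4_kwh_per_day = GPT4_KWH / GPT4_DAYS

print(f"GPT-4 vs GPT-3 training energy: {energy_ratio:.1f}x")
print(f"GPT-3 average: {gpt3_kwh_per_day:,.0f} kWh/day")
print(f"GPT-4 average: {gpt4_kwh_per_day:,.0f} kWh/day")
```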

The current model, GPT‑5, which was officially released on August 7, 2025, is said to be much more powerful than previous iterations, but no data on its training is available. OpenAI has not shared training times, since they can reveal key information about the GPU cluster scale, hardware efficiency, compute budget, model architecture, and cost structure. Typically, to estimate the power and water consumption, as well as the carbon footprint, of a given AI model, researchers use information about the number and type of GPUs used for training, training compute (FLOPs), training dataset size (tokens), and scaling patterns. However, for models such as GPT-4 and GPT-5, this information is not public.

It is nearly impossible to calculate the electricity consumption for training the latest model. What most experts believe is that this was not a one-time event, but took many months to complete over multiple retraining phases. Moreover, this complex process used enormous distributed GPU clusters.

In fact, it is the new training method that truly sets OpenAI’s GPT-5 and o1 models apart from their predecessors. The o1 model marked a turning point in the shift from general-purpose language generation to specialised reasoning systems. Released in late 2024, o1 was engineered to ‘think before answering,’ using additional compute during inference to generate structured internal reasoning steps. This approach delivered major gains in complex problem-solving, particularly in mathematics, coding, and logic, while accepting slower output and higher compute costs. Trained with a mix of large-scale pre-training and intensive reinforcement-learning optimisation, o1 became OpenAI’s first model explicitly built for accuracy and stepwise reasoning rather than speed or conversational fluency.

GPT-5 builds directly on this foundation, extending o1’s reasoning architecture into a broader, more capable system. While GPT-5 serves as a fast, general-use model, it can route difficult tasks through a deeper ‘thinking’ engine descended from o1’s methodology, blending responsiveness with high-precision reasoning when needed. GPT-5 integrates these abilities more seamlessly and at a far greater scale. Of course, this ‘chain of thought’ may be similar to how humans tackle problems step-by-step, but it also comes at a much higher cost – up to 8 times what GPT-4 consumed in terms of power (the maximum electricity consumption we used for our calculations, although it may be much higher).

Estimating OpenAI’s Profits from Paid Subscriptions

While the 2.5 billion daily user queries may cost OpenAI close to $2.4 billion per year, this is a fraction of the company’s revenues from paid subscriptions. In September 2024, OpenAI COO Brad Lightcap said that ChatGPT had exceeded 11 million paying subscribers, of which a million were on business-oriented plans, i.e. Enterprise and Team users, while the rest, roughly 10 million people, were paying for the Plus plan.

In December 2024, the $200/month Pro plan was made available; within a month, Sam Altman admitted in a post on X that the company was losing money on Pro subscriptions. It turned out that people were using the Pro perks more than the company had expected. OpenAI has not disclosed the number of Pro subscriptions or how much the services included in the plan actually cost it. So, how much is OpenAI making from paid subscriptions?

In November, The Information revealed that ChatGPT had roughly 35 million paying subscribers over the summer. Citing internal sources, it wrote that the company planned to increase this figure to 220 million by 2030. Just for reference, Spotify reported having 281 million premium subscribers globally as of the third quarter of 2025; Netflix had around 300 million paid members at the end of 2024. This suggests OpenAI’s ambitions stretch way beyond its already dominant position on the market – it clearly aims to transform ChatGPT from a powerful niche tool into a mass-market subscription service on par with the world’s biggest consumer platforms.

The reported 35 million paid subscribers include users across various subscription tiers, such as the consumer-focused ‘Plus’ plan (typically $20/month) and the business-focused ‘Pro’ ($200/month), ‘Enterprise’ (up to $60/month), ‘Team’ ($25/month), and ‘Edu’ (up to $50/month) plans.

Based on past statistics, we can make some rough estimates of how many users pay for what exactly. Here is a breakdown of what OpenAI might be making from paid subscriptions as of December 2025:

- 1 million Enterprise users paying $60/month

- 2 million Team users paying $25/month

- 1 million Pro users paying $200/month

- 500 thousand Edu members paying $50/month

- 30.5 million Plus users paying $20/month

This means that the company could make around $945 million from paid subscriptions every month, or around $11.34 billion per year. To put things in perspective, this is nearly 5 times the estimated annual electricity costs from processing user queries. The annual energy costs ($2.42 billion) could easily be covered with less than three months’ worth of revenue. These are, of course, rough estimates based on publicly available information; little of this has been confirmed by the company.
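The subscription estimate can be laid out as a short sketch. Multiplying the tiers exactly as listed above (with Team at $25/month) gives roughly $945 million a month; the subscriber counts are the article's own rough assumptions, not confirmed figures, and the names in the code are illustrative.

```python
# (subscribers, USD per month) per tier, as listed in the article.
TIERS = {
    "Enterprise": (1_000_000, 60),
    "Team": (2_000_000, 25),
    "Pro": (1_000_000, 200),
    "Edu": (500_000, 50),
    "Plus": (30_500_000, 20),
}
ANNUAL_ELECTRICITY_COST_USD = 2.42e9  # estimated yearly cost of queries

total_subscribers = sum(count for count, _ in TIERS.values())
monthly_revenue = sum(count * price for count, price in TIERS.values())
annual_revenue = monthly_revenue * 12

# How revenue compares with the estimated electricity bill.
revenue_to_cost = annual_revenue / ANNUAL_ELECTRICITY_COST_USD
months_to_cover = ANNUAL_ELECTRICITY_COST_USD / monthly_revenue

print(f"Subscribers:     {total_subscribers / 1e6:.1f} million")
print(f"Monthly revenue: ${monthly_revenue / 1e6:,.0f} million")
print(f"Annual revenue:  ${annual_revenue / 1e9:.2f} billion")
print(f"Revenue / electricity cost: {revenue_to_cost:.1f}x")
print(f"Months of revenue to cover electricity: {months_to_cover:.1f}")
```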

Bear in mind that the estimations for the number of paying subscribers are quite conservative, including for the Pro users. The electricity costs, on the other hand, are likely much lower if the company uses its own renewable energy sources such as photovoltaics and uses electricity from the grid at preferential rates (which it almost certainly does).

Methodology

To estimate the number of queries ChatGPT handles each week and the cost of these operations, the team at BestBrokers based the calculations on recent reports, including statements by Sam Altman, who said that ChatGPT had already reached 800 million weekly active users (WAU), while the AI model was processing roughly 2.5 billion prompts per day. By December, media reports, including one from TechCrunch, referred to a slightly higher number: around 810 million WAUs.

With these figures in hand, we calculated the daily electricity consumption of ChatGPT by multiplying the total number of queries by the estimated energy usage per prompt, which is 18.9 Wh, according to the University of Rhode Island’s AI lab. From there, we extrapolated the energy consumption on a monthly and yearly basis. To determine the associated costs of this electricity usage, we applied the average U.S. commercial electricity rate of $0.141 per kWh as of September 2025.